Work With Perth’s Leading SEO Consultant

The SEO industry is a minefield. If you have tried and failed with the big SEO agencies before, it’s time to try something new. By working with me, you get increased accountability, transparency, and direct access to the SEO specialist solely responsible for your results.

PERTH’S #1 SEO EXPERT

SEO Services For Businesses Who Want Results Now.

Whether you are looking to protect your position or become an authority in your industry, investing intelligently in a comprehensive organic search strategy is the key to ensuring the success of your online growth.

Know that working with one of Perth’s most reputable Search Engine Optimisation experts protects your website and rankings. We have worked with ASX-listed companies and some of Perth’s top digital marketing agencies.

Let’s Talk About SEO

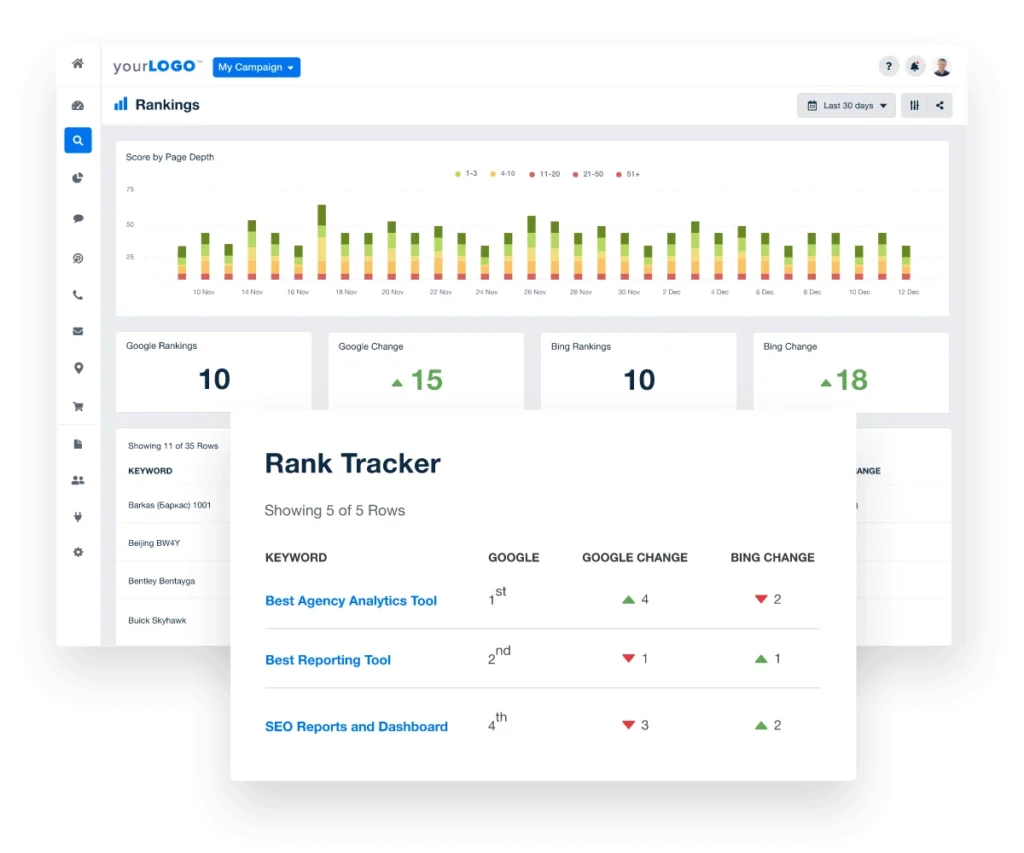

SEARCH ENGINE OPTIMISATION TOOLS

HIRING SEO AGENCIES IN PERTH

Work With An SEO Expert

Avoid the agency trap and partner with Perth SEO Agency. Become one of a select few with direct access to an industry-leading search engine optimisation professional. I devise personalised, tailored organic search strategies focusing on what you need to achieve measurable results.

Work with the Perth SEO Agency, and your SEO campaign will get the attention to detail that other SEO agencies cannot offer. To rank your website #1 in Google’s search results, you need to work on three core areas every month – Domain Authority, Technical Integrity, and Relevancy. This involves optimising the following areas:

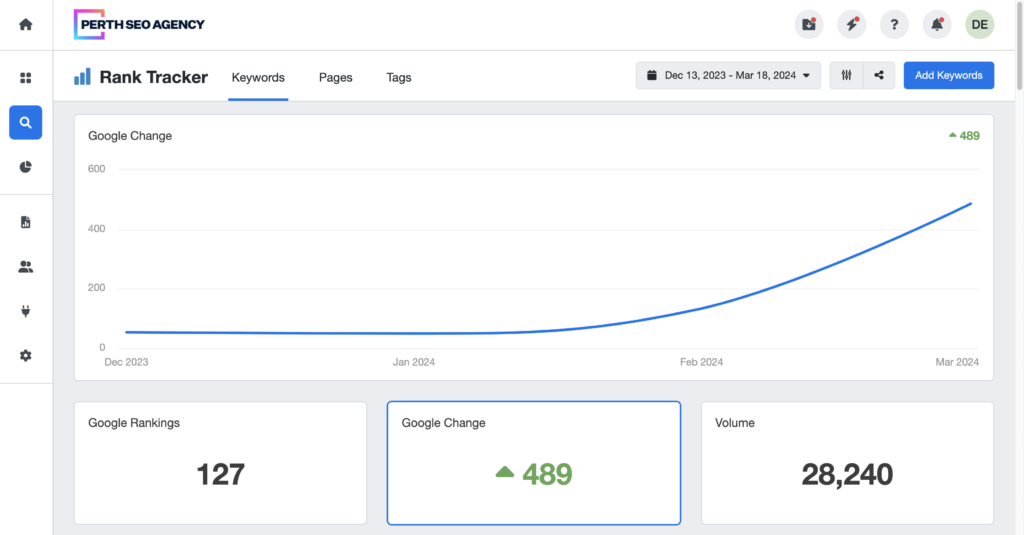

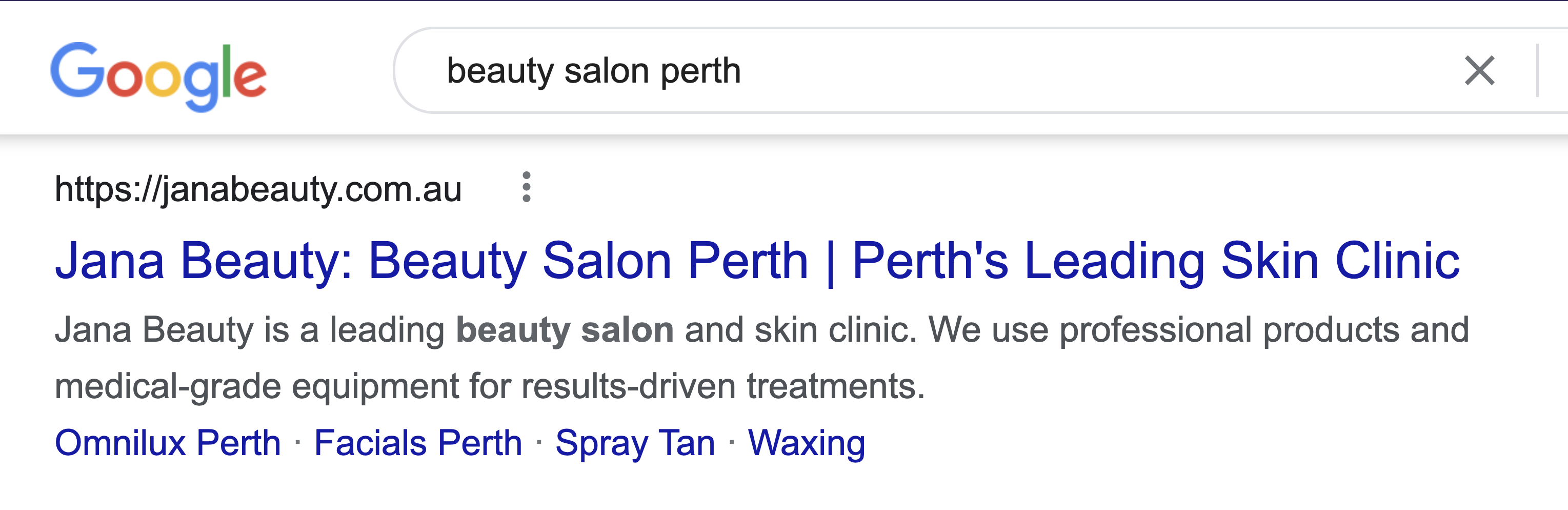

SEO Client Testimonials

“Daniel was able to get us to position one within 3 months for terms like Beauty Salon Perth and extraction facials. We also reached page one for treatment keywords like IPL Perth which are highly competitive in the beauty industry. The results have been incredible. Both our in-store and online eCommerce sales have increased dramatically.” – Jana from Jana Beauty in Subiaco

Search Engine Optimisation Strategies

Ranking #1 on Google Requires a Comprehensive Strategy

After an in-depth audit of your website, I put together a campaign roadmap containing the tasks that need to be executed and when they will be completed.

We also analyse the search results to determine what the top-ranking websites have in common. This includes the structure of content, topics discussed, and how they’ve leveraged internal links and offsite SEO, to name a few. I also review any work and reports done by other SEO agencies, as mistakes can be costly and hard to identify.

Off-Page SEO (Link Building)

What is Link Building?

Off-page SEO is the optimisation of the ranking factors outside of the website. Off-page SEO and link building focus on optimising a website’s backlink profile.

Search engine algorithms identify the links that point to websites to determine their credibility. Links from other websites pass on what is called “PageRank”, which is the link equity (value) passed to a web page.
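
To make the idea of link equity concrete, here is a minimal sketch of the classic, publicly described PageRank calculation. It is a simplified illustration only, not how Google ranks pages today. The page names in the example graph and the `damping` and `iterations` parameters are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank for a small link graph.

    `links` maps each page to the list of pages it links out to.
    All page names are hypothetical and used for illustration only.
    """
    # Collect every page that appears as a source or a target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its equity evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its equity everywhere.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page graph: three pages link to "home".
example_links = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home"],
    "partner-site": ["home"],
}
example_ranks = pagerank(example_links)
```

In this toy graph, "home" ends up with the largest score because it receives links from every other page, which is the intuition behind building links from relevant, authoritative sites.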

Link Building is the Key to Ranking #1 on Google

Perth SEO Agency specialises in link building through outreach and digital PR. This is possible only through a network of contacts across various publishing niches.

Link building is one of the more difficult areas of search engine optimisation. Few Perth SEO agencies get this right, and more often than not, they buy cheap links through paid services.

The Various Methods of Link Building

- Outreach

- Digital PR & Content Marketing

- Guest Posting

- Citation Building

Digital PR For SEO

Link building is the process of acquiring inbound links from other websites. Links are acquired through digital PR campaigns.

A digital PR link building strategy relies on producing valuable content and syndicating it via press releases and organic outreach.

Outreach Link Building

One of the most effective link building methods is outreach link building. Building links via outreach is hard. It’s a highly specialised skill and extremely time-consuming. That’s why so few SEO agencies in Perth do it.

When it comes to link building, it’s all about who you know. Outreach link building is relationship-based. By having a good working relationship with publishers, online journalists, and bloggers, I am able to get links from authoritative sites.

On-Site Technical SEO

What is Technical SEO?

Technical SEO is the optimisation of non-content elements on a website. Technical SEO involves optimising a website’s code and server so that search engines can effectively crawl, index, and understand its content.

- Core Web Vitals (Speed)

- Mobile-Friendliness

- Crawl Budget

- Security (SSL)

- Sitemap and Robots.txt

- Structured Data Markup
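
As one small example of a technical check from the list above, the sketch below uses Python's standard `urllib.robotparser` module to test which URLs a crawler may fetch under a given robots.txt. The robots.txt contents, the `example.com` URLs, and the `crawl_report` helper are all hypothetical and exist only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /admin/
Sitemap: https://www.example.com/sitemap.xml
"""


def crawl_report(robots_txt, urls, user_agent="Googlebot"):
    """Return a dict mapping each URL to True if `user_agent` may crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


example_urls = [
    "https://www.example.com/services/",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/admin/login",
]
example_report = crawl_report(ROBOTS_TXT, example_urls)
```

A check like this helps catch cases where an important page has been blocked from crawling by accident. It says nothing about whether a page is indexed or how it ranks.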

How We Leverage Technical SEO

With a comprehensive knowledge of technical on-site optimisation and a broad range of development capabilities, no technical SEO issue will go unresolved.

Content Optimisation

What is Content Optimisation?

Content optimisation is the process of writing and structuring content so that search engines determine it to be the best resolution to the search intent of its users and therefore rank it higher in search results.

- Content Auditing

- Entity Analysis

- Semantically Structured Content

- Google Natural Language Processing (NLP)

- Content Briefs

- Keyword Research

Using Data Analysis to Write Content For SEO

Using Google’s Natural Language Processing (NLP) algorithm, I analyse the topics and keywords that Google identifies on the web pages of your top-ranking competitors. I then use this data to evaluate how well relevant topics are covered in your content. Following this, I prepare a content brief and writing guidelines that specify which topics need to be covered and the context they are to appear in.

My content optimisation process is unique. It uses my own custom Python script paired with Google’s publicly available (but rarely utilised) Natural Language Processing algorithm.
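
As a rough illustration of the topic coverage comparison described above, the sketch below finds topics that appear on a good share of competitor pages but are missing from your own page. It is not the custom script mentioned here, and it makes no calls to Google's service: it assumes the topic lists have already been extracted by some entity analysis step. The `topic_gaps` function, the `min_share` threshold, and the sample topics are all hypothetical.

```python
from collections import Counter


def topic_gaps(competitor_entities, own_entities, min_share=0.5):
    """List topics covered by competitors but absent from your page.

    `competitor_entities` is a list of topic lists, one per competitor page.
    `own_entities` is the topic list for your page.
    A topic is reported if at least `min_share` of competitor pages mention it.
    """
    own = {entity.lower() for entity in own_entities}
    counts = Counter()
    for entities in competitor_entities:
        # Count each topic once per page, ignoring case.
        counts.update({entity.lower() for entity in entities})

    threshold = min_share * len(competitor_entities)
    gaps = [
        (topic, pages)
        for topic, pages in counts.items()
        if pages >= threshold and topic not in own
    ]
    # Most widely covered topics first, then alphabetical for stable output.
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


# Made-up topic lists, for illustration only.
example_competitors = [
    ["Pricing", "Opening hours", "Aftercare"],
    ["Pricing", "Aftercare", "Booking"],
    ["Pricing", "Parking"],
]
example_own = ["Pricing", "Booking"]
example_gaps = topic_gaps(example_competitors, example_own)
```

The resulting list of missing topics is the kind of input that could feed a content brief, with a writer deciding which gaps are actually relevant to the page.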

How SEO Content is Written

First and foremost, your content needs to convert users into paying customers. If your agency has told you that your website needs bulk content stuffed with keywords, that is not the case. How I produce content:

- Write for people first, search second.

- I don’t do keyword stuffing.

- Conversion-focused content is key.

Who Will Write My Website Content?

I work with a handful of content specialists who specialise in different industries. That is because your content needs to be written by someone with experience in your industry, a person who will understand the unique value proposition of your business. For example, the content produced for Kimberley Cruises has led to a significant increase in traffic.

COMPLETE TRANSPARENCY & ACCOUNTABILITY

Comprehensive SEO Strategy

1) SEO Audit

An in-depth analysis of your website’s content, technical integrity and backlink profile. In addition, I will review any past work that previous SEO agencies may have carried out, as this can often be the cause of issues.

The audit of your website and current organic performance will be used to define the campaign’s deliverables. Following this, you will receive a report that details the state of your off-page and on-site SEO.

2) SEO Strategy

Your SEO strategy will define a list of specific SEO deliverables and tasks. No fluff, padding, or anything you don’t need. The deliverables defined in your SEO strategy will focus on the technical integrity of your website, optimising the content and metadata, and increasing your website’s authority through a bespoke link building campaign.

The strategy will include all link building (Digital PR), on-site technical SEO, content optimisation and development.

3) SEO Campaign

Your SEO campaign will work to a set campaign timeline. This means you will know what SEO tasks are being performed and when. No changes will be made to your website without your consent.

Following the inception of your SEO campaign, we will work as a team to stick to the campaign timeline and execute the predefined SEO tasks and deliverables. Whether you wish to sign off on changes is up to you, and we can work to your schedule.

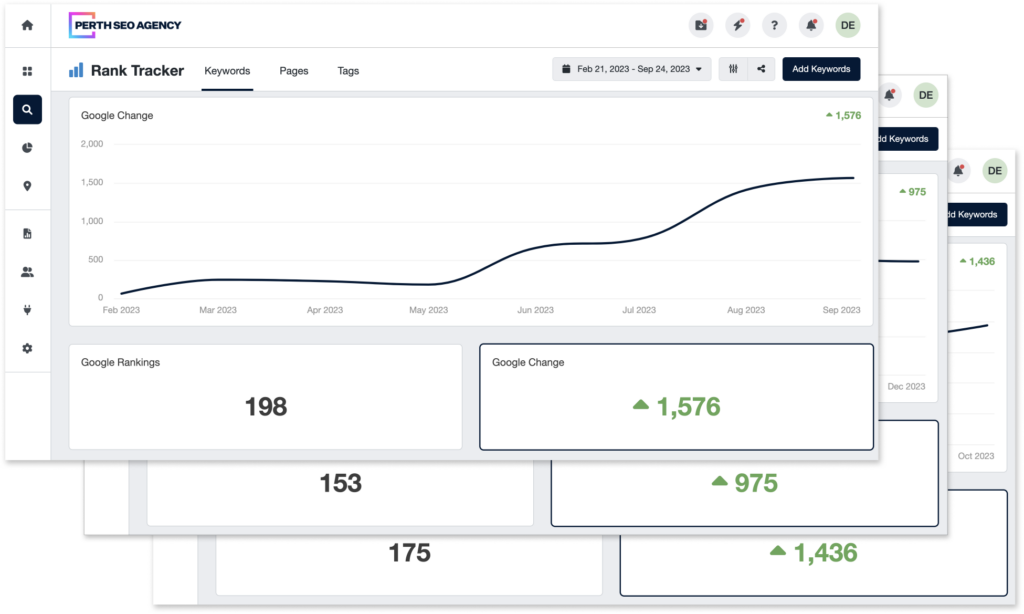

Driving Business Growth With SEO

Outmanoeuvre your competition by implementing a superior strategy. To outrank your competition, you must build more links, present better content, and have a flawless site.

As an industry-leading SEO consultant, I have worked with a wide range of businesses, from small businesses to eCommerce stores and enterprise ASX-listed companies. I have achieved results in a wide range of industries, including finance, property, and beauty. I have also achieved tremendous results for cosmetic surgery clinics, increasing the number of procedure bookings for liposuction surgery, eyelid surgery, and botox injections in Perth.

There are hundreds of SEO experts, most of whom operate with no accountability for results or deliverables. By partnering with an SEO consultant, you have a direct line to the person carrying out the work. All work carried out is reported on a monthly basis. You will never have to chase up a response or wonder where your investment in SEO is going.